Honours Project

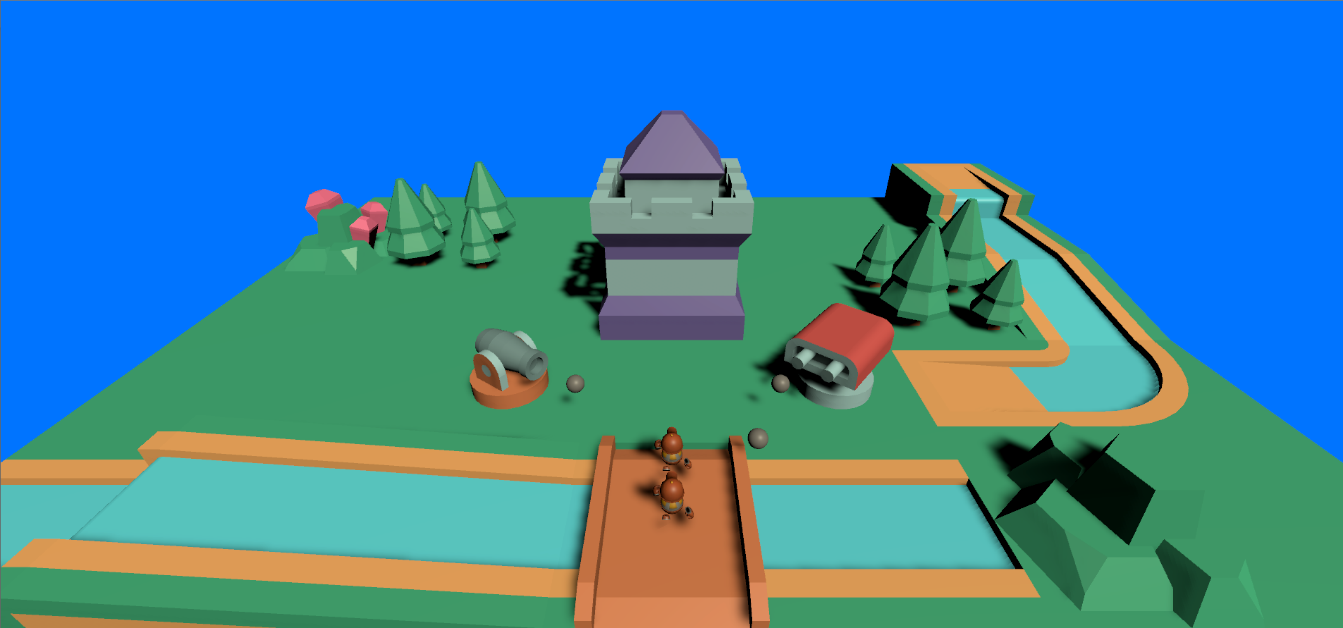

Research Question: How effective can gesture recognition be at aiding accessibility to video games?


Objectives:

- To research suitable methods within machine learning to recognise hand gestures.

- To evaluate how effective gesture control can be for accessibility in games.

- To build a system that allows control of video games through gesture recognition.

Photo by engin akyurt on Unsplash

To better understand how an artificial neural network functions, I dedicated the first 3-4 months of my honours project to creating one from scratch in C++. The network was capable of learning to identify handwritten digits from the MNIST database. However, when I attempted to train it on the dataset I created for this project, I could not find hyperparameters that let the network train effectively. The result was a network that would descend towards a minimum, but only slowly.

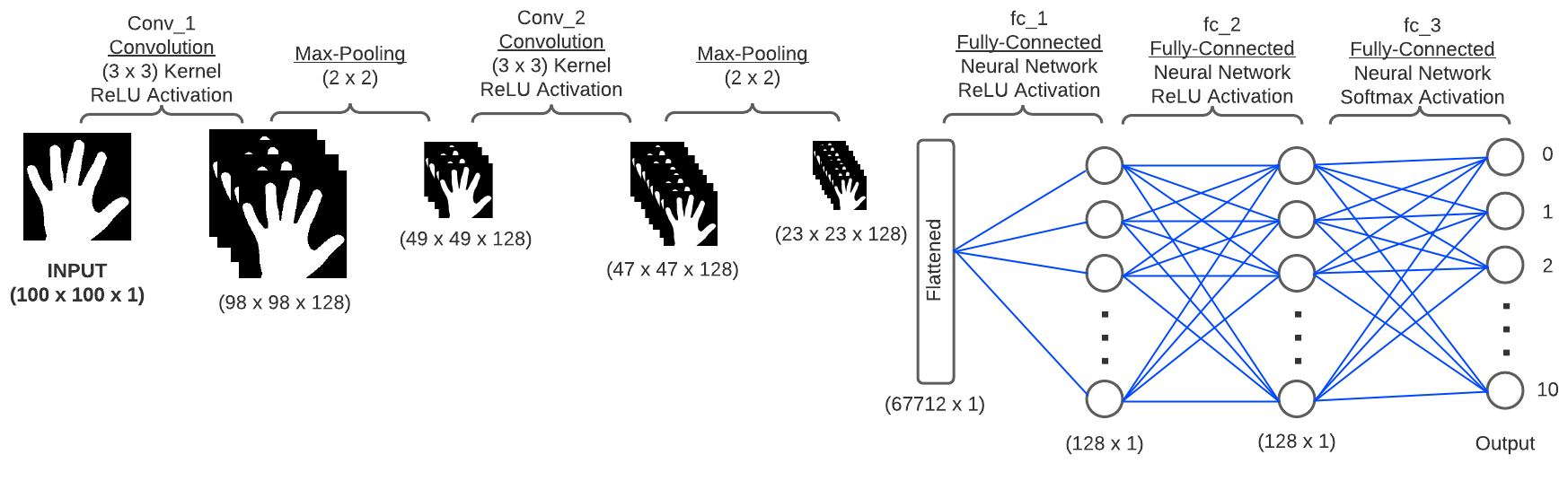

With the deadline quickly approaching, I opted to switch to TensorFlow and implement a Convolutional Neural Network. Below is a diagram I created of the network architecture.

Network Input

I used OpenCV to capture the input for the network. The first 60 frames after the program starts are used to accumulate the average pixel values of the user's background, which allows background separation to be used. Below are the five stages an image passes through before being used as input to the network.

A frame is captured in BGR every 25 ms. The frame is first converted to greyscale, then blurred with a Gaussian blur kernel to remove hard edges. The next step is to separate the user's hand from the frame by comparing the current frame to the accumulated background average. The final step is to change any pixel above a certain value to white and any below it to black, which provides a clear monochrome image of the user's hand.